Linux Only Boots Under Recovery Mode

This is part of the Plan.

I tried to install Linux on my PC and went through all sorts of distributions, including the most recent 3-4 versions of Mint and Ubuntu, but all of them (including live CD mode) only run under recovery mode (with really low resolution and software-only rendering), which makes me MEGA unhappy. When I tried to boot them normally, all I got were black screens, or more precisely, purple screens.

After consulting online, I found that the only special thing about recovery mode is that it adds nomodeset and xforcevesa to the GRUB_CMDLINE_LINUX parameter of the grub file located at /etc/default/grub.

So, basically, the problem was solved by finding out which of the two parameters recovery mode added into GRUB_CMDLINE_LINUX made the system bootable again. It turned out it was the xforcevesa that solved the problem, and here is what I did:

- If the live CD mode failed to load, enter the recovery mode of live CD. (Mint by directly selecting the second option, Ubuntu by pressing F6)

- Now you should be able to get into the live system no matter what happened before. Install the distribution as usual, and use "logical partition" since this is probably the second system on the PC. Install the boot loader on the entire hard drive.

- Restart and get into the system in recovery mode (second option in GRUB, select continue when the option menu appears). Open the terminal, and here's the most important part:

sudo gedit /etc/default/grub

- Edit the grub file, replace the parameter in GRUB_CMDLINE_LINUX=<PARAMETERS> with xforcevesa, save and close the file. Hold on, we are not done yet, always remember to:

sudo update-grub

Then, restart your computer and you should be able to boot the system normally.

Great, problem solved! Wow, very Mint, so Ubuntu.

There is still another thing bothering me, though: I can only boot into the system successfully without any USB devices plugged in, which is super confusing. I will try to figure this out.

The Quest to Hash Table

Several mistakes I made when I tried to write a hash table program in C.

- A pointer that points to a pointer that points to a pointer that points to a pointer that points to a...

  Generating an array of pointers is the core step when creating a hash table, but the malloc function and the pointer part can be a little bit confusing. There are, however, general rules for this:

  - The number of *s we put before a variable tells us how many dereferences we need to get back to the original type we want. If we want to make an array of node * (or some other pointer type), then we should add one extra * when declaring the array: node **array_of_pointers;
  - The general formula for using malloc to allocate an array containing NSLOTS pointers to node is array_of_pointers = (node **)malloc(NSLOTS * sizeof(node *)). The rule still holds if we add or remove a * on both sides simultaneously: array_of_pointers = (node ***)malloc(NSLOTS * sizeof(node **)).

  Do note that the cast is optional in C, so array_of_pointers = (node **)malloc(NSLOTS * sizeof(node *)) is equivalent to node **array_of_pointers = malloc(NSLOTS * sizeof(node *)). Don't be afraid to index objects created this way: it's fine...

- Be mindful when you add a node to one of the linked lists, and more so when you are freeing it. Do note that when you check whether a key is already present in the hash table and the key turns out to exist already, you are left holding an extra copy of the key. Make sure it is freed. Also do not forget to free the key afterwards.
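To make the two points above a bit more concrete, here is a minimal sketch of a chained hash table in C. It is not the original program: the node layout, the helper names (dup_key, table_create, table_set, table_get, table_free), the value of NSLOTS and the djb2-style hash are all assumptions made up for illustration. It allocates the slot array with the malloc formula from the first point and shows where the extra copy of a key gets freed when the key is already present.

```c
#include <stdlib.h>
#include <string.h>

#define NSLOTS 16 /* assumed table size, chosen arbitrarily */

/* Assumed node layout: each node owns a heap-allocated copy of its key. */
typedef struct node {
    char *key;
    int value;
    struct node *next;
} node;

/* Illustrative djb2-style string hash; any reasonable hash would do. */
static unsigned long hash_key(const char *s)
{
    unsigned long h = 5381;
    while (*s != '\0')
        h = h * 33 + (unsigned char)*s++;
    return h;
}

/* Return a heap-allocated copy of s (NULL on allocation failure). */
char *dup_key(const char *s)
{
    size_t len = strlen(s) + 1;
    char *copy = malloc(len);
    if (copy != NULL)
        memcpy(copy, s, len);
    return copy;
}

/* Allocate NSLOTS pointers to node: one more * than the element type. */
node **table_create(void)
{
    node **slots = malloc(NSLOTS * sizeof(node *));
    if (slots == NULL)
        return NULL;
    for (size_t i = 0; i < NSLOTS; i++)
        slots[i] = NULL; /* indexing the malloc'd block works like an array */
    return slots;
}

/* Store value under key. Takes ownership of key, which must come from malloc.
 * Returns 0 on success, -1 on allocation failure. */
int table_set(node **slots, char *key, int value)
{
    node **head = &slots[hash_key(key) % NSLOTS];
    for (node *n = *head; n != NULL; n = n->next) {
        if (strcmp(n->key, key) == 0) {
            n->value = value;
            free(key); /* key already stored: free the extra copy */
            return 0;
        }
    }
    node *n = malloc(sizeof *n);
    if (n == NULL) {
        free(key);
        return -1;
    }
    n->key = key; /* the new node now owns the key */
    n->value = value;
    n->next = *head;
    *head = n;
    return 0;
}

/* Look up key; returns 1 and writes *out if found, 0 otherwise. */
int table_get(node **slots, const char *key, int *out)
{
    for (node *n = slots[hash_key(key) % NSLOTS]; n != NULL; n = n->next) {
        if (strcmp(n->key, key) == 0) {
            *out = n->value;
            return 1;
        }
    }
    return 0;
}

/* Free every node together with its key, then the slot array itself. */
void table_free(node **slots)
{
    for (size_t i = 0; i < NSLOTS; i++) {
        node *n = slots[i];
        while (n != NULL) {
            node *next = n->next;
            free(n->key);
            free(n);
            n = next;
        }
    }
    free(slots);
}
```

In this sketch the caller always hands table_set a fresh copy, e.g. table_set(t, dup_key("apple"), 1). Calling it a second time with the same key updates the value and frees the duplicate, so the table never ends up holding (or leaking) two copies of one key.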

Apologies if this seemed unfinished XDD.

I started writing this stuff five months ago and just did not get a chance to finish it till now... Tried to pull some points from the original code I had, but this is pretty much all I've got now XDD.

My Server Setups and Whatnot

Why move the blog? And to where?

After putting up with the clunky WordPress blog (and Bluehost's 2003-looking admin panel for that matter) for three years, I finally decided to ditch everything I currently have and restart my blog in a more civilized manner. There were a couple of things that I was not happy about with my old WordPress setup, namely:

- Clunky and eats up my server storage.

- No easy way to back up with tools I know.

- Does not come with a command line interface, which is becoming my preferred way of doing almost anything.

- Lacking some basic features I wanted, e.g. multilingual support. As powerful as WordPress may be in the right hands, I do not want to invest too much effort in learning CSS/js/php, nor do I want to use some plugin from some sketchy WordPress plugin marketplace.

- These theme and plugin marketplaces creep me out in the same way as the Ubuntu Software Center.

- WordPress has a lot of features I do not actually need, e.g. the user permission system, which is overkill for my personal blog site.

Picking an alternative blogging system was not too hard once I was aware of my needs: a fast and minimalist static site generator implemented in a language I know (or find valuable to learn) with out-of-the-box multilingual support, a.k.a. Hugo.

As for hosting services, I considered GitHub Pages and Netlify to be fast and easy solutions, but I wanted something more substantial for a personal blog, like a VPS. Besides, GitHub Pages not supporting https for custom domains is a deal breaker for me. I filtered the list of VPS hosting providers down to those with Arch Linux support and ended up with DigitalOcean. Since I wanted to completely sever my connection with Bluehost, I also moved my domain name host to Google Domains.

Install Arch Linux

Do note that Arch Linux is probably not the best suited Linux distro for a server. Use a non-rolling distro if stability is a concern. I use it only because I also run it on all my other computers. Back up the droplet often if you decide to go down this route: it hasn't happened to me yet, but I've heard people complaining about Arch breaking too often.

Installation

Apparently my information on DigitalOcean supporting Arch Linux was outdated, as they stopped supporting it a while back. Thankfully, it is still not too hard to bring Arch Linux to a droplet (this is how DigitalOcean refers to a server) thanks to the awesome project digitalocean-debian-to-arch. All I needed to do was set up a droplet, ssh into the server, and follow the instructions:

# wget https://raw.githubusercontent.com/gh2o/digitalocean-debian-to-arch/debian9/install.sh -O install.sh

# bash install.sh

Low Level Setup

Once the script finished running, I had an Arch Linux system running on my droplet with internet access. Most of the additional setup needed can be found in the Arch Wiki. Since I am by no means a great tutorial writer, I suggest referring to the Arch Wiki for detailed steps. The commands recorded here are just for book-keeping purposes and are by no means the best way to do things.

System Clock

Sync system clock and set time zone.

# timedatectl set-ntp true

# timedatectl set-timezone <Region>/<City>

Base Packages

Install/update base packages.

# pacman -S base base-devel

Fstab

Generate fstab.

# genfstab -U / >> /etc/fstab

Set Locale

Uncomment en_US.UTF-8 UTF-8 in /etc/locale.gen then generate locale with:

# locale-gen

Set LANG=en_US.UTF-8 in /etc/locale.conf.

Hostname

Edit /etc/hosts and add hostname of droplet:

127.0.1.1 <hostname>.localdomain <hostname>

Boot Loader and Initramfs

Microcode updates for Intel processors:

# pacman -S intel-ucode

# grub-mkconfig -o /boot/grub/grub.cfg

Add crc32 modules to initramfs, as otherwise the droplet fails to boot. Edit /etc/mkinitcpio.conf :

MODULES="crc32 libcrc32c crc32c_generic crc32c-intel crc32-pclmul"

Regenerate the initramfs image.

# mkinitcpio -p linux

Root Password

You know the drill.

# passwd

User Setups

Here are some additional settings to make Arch Linux more useable.

Create User

Obviously it is not a good idea to use root account:

# useradd -m -G wheel -s /bin/bash <username>

# passwd <username>

Add User to Sudoer

Edit /etc/sudoers (visudo is the safe way to do so) and add:

<username> ALL=(ALL) ALL

Login As User

We will finish the rest of the configuration using the user account.

# su <username>

Package Manager

I used to use packer as a wrapper around AUR and pacman. However, after learning about the inherent insecurity in its package building process, I switched to a more secure AUR helper, trizen (pacaur is another choice, and fun fact: there is a reddit bot that tells you to switch to pacaur every time yaourt is mentioned in a post). trizen prompts the user to inspect PKGBUILD, *.install and other scripts before sourcing them, and it is written in Perl instead of Bash. To install trizen, first install its dependencies via pacman according to its AUR page, then clone its git repo to a local directory. Navigate to the directory containing PKGBUILD and run

$ makepkg

to make package and

$ sudo pacman -U trizen-*.pkg.tar.xz

to install trizen.

Useful Packages

Once the package manager is in place, install packages to your heart's content! Some of my bread-and-butter packages include emacs (I installed the cli-only version, emacs-nox), tmux (terminal multiplexer, very useful), zsh, vim (for quick edits), etc.

Security Related Stuff

Now that a usable Arch Linux installation is in place, I would employ some security measures before hosting my website on it.

Secure Login via ssh

On local machine, generate your ssh keypair:

$ ssh-keygen -t rsa

Send your ssh keys to server:

$ ssh-copy-id <username>@<server>

Now, on server, make the following edits to /etc/ssh/sshd_config :

PermitRootLogin no

ChallengeResponseAuthentication no

PasswordAuthentication no

UsePAM no

AllowUsers <username>

These changes will disable root login, disable password login and only allow specified user to login via ssh.

It is advisable to also change the default port (22) used for ssh connections. In the same file, specify the port with (please remember this port selection):

port <non-std-port>

For these changes to take effect, restart ssh daemon:

$ sudo systemctl restart sshd.service

Keep this ssh session intact and attempt to start another ssh connection in local machine to see if the changes have taken effect (the original session is needed in case things are not working):

$ ssh -p <non-std-port> <username>@<server>

Firewall Settings

I use ufw as my firewall and it is very easy to set up. Install ufw with trizen and allow the desired ports:

$ trizen -S ufw

$ sudo ufw allow <port>/<protocol>

For instance, to allow ssh communication, allow 22/tcp or ssh (if you used a non-standard port, allow <non-std-port>/tcp). Some other useful ports are:

| Port | Usage |
|---|---|
| 80/tcp | http |
| 443/tcp | https |
| 143 | imap access |
| 993 | imap over ssl |
| 25 | receive incoming mail |
| 587 | smtp access (with or without ssl) |

To review the added ports and enable them:

$ sudo ufw show added

$ sudo ufw enable

Auto start up:

$ sudo systemctl enable ufw.service

Sync Server Time

Sync server time with ntp :

$ trizen -S ntp

$ sudo systemctl enable ntpd.service

Check time server status with:

$ ntpq -p

Setting up PTR Record

It turns out that DigitalOcean handles this automatically: all I needed to do was set the droplet name to a Fully Qualified Domain Name (FQDN), in this case shimmy1996.com. I then checked that the record was in place with:

$ dig -x <ip_address>

Firing up the Server

Next step would be actually preparing the server for serving contents.

Create Web Directory

Create a directory for serving web contents, a common choice would be:

$ mkdir ~/public_html

Make sure to give this directory (including the user home folder) appropriate permission with chmod (755 would normally work). Populate the directory with a simple index.html for testing if you want.

Install nginx

Install nginx with trizen, and edit /etc/nginx/nginx.conf to set up http server (the one set to listen 80 default_server):

server_name www.<domainname> <domainname>;

root /path/to/public_html;

For the server_name line add as many as you want. You may want to put your mail server address on it as well so that you can generate a single ssl certificate for everything. After these changes are made, (re)start and enable nginx:

$ sudo systemctl restart nginx.service

$ sudo systemctl enable nginx.service

DNS Setup

The next step is to set up DNS records for our server. There are three types of records that need to be set up initially, NS, A, and CNAME. I also included some other useful records:

| Type | Hostname | Value | Usage |
|---|---|---|---|
| NS | @ | nameserver address | specifies name server to use |
| A | @ | supplied IPv4 address | maps host name to IPv4 address |
| CNAME | www (can be anything) | @ | sets www.<hostname> as an alias |
| MX | @ | mail server address | specifies mail server to use |
| CAA | @ | authorizer of SSL certificate | prevents other authorities from certifying SSL certificate |

In my case, though I use Google Domains to host my domain, I still use DigitalOcean's name servers. So I needed to set up these records on DigitalOcean and the NS records on Google Domains.

After this step, your website should be accessible via your domain name, although it may take a few hours for the DNS records to propagate.

SSL Certificate

Let's Encrypt is a great project and certbot is an awesome tool for SSL certificate generation. Kudos to the nice folks at EFF and Linux Foundation. I simply followed the instructions on EFF site:

$ sudo pacman -S certbot-nginx

$ sudo certbot --nginx

To provide some extra credibility to the certificate, I added a CAA record in my DNS settings with issue authority granted for letsencrypt.org. For now Let's Encrypt does not support wildcard certificates but will in January 2018, and this is why I added a bunch of subdomains to my nginx.conf (so that the certificate covers these subdomains as well).

What Now?

After a couple of hours (mostly waiting for DNS records to propagate), my website is online again. With a VPS at my disposal, I also host my personal email now, and I might organize my random notes pieced together from various websites into a post as well. I am still trying to figure out an efficient workflow for writing multilingual posts with org-mode in Hugo, and once I am convinced I have found an acceptable solution, I will also post it.

Spam or Ham

As planned, I am documenting my mail server setup. Setting up a mail server is probably documented everywhere, but I had to put in some effort to make my setup secure enough to prevent my mail from being mistaken for spam.

Setting up the mail server

I really don't see how I can write anything better than this tutorial, so I will just document some of the steps that seemed missing from the tutorial.

Setting DNS Record

Before anything, I needed to set up my DNS records. I created an A record for my mail server address, and added an MX record indicating that mail will be handled by the mail server.

Creating Maildir

After setting up postfix for the first time, I needed to set up the Maildir manually and give it appropriate permissions:

$ mkdir -p /home/<username>/Maildir/{cur,new,tmp}

$ chown <username> /home/<username>/Maildir/{,cur,new,tmp}

$ chmod 0755 /home/<username>/Maildir/{,cur,new,tmp}

SSL Certificate

Instead of using the built-in certificate generators in dovecot, I chose to use the same SSL certificate as my website. I added my mail server address to the server_name field in /etc/nginx/nginx.conf and generated my certificate with certbot. After that, I simply changed /etc/dovecot/conf.d/10-ssl.conf for dovecot:

ssl = yes

ssl_cert = </path/to/fullchain.pem

ssl_key = </path/to/privkey.pem

Similarly for postfix I also used this certificate. Do note that dovecot and postfix should be run as root to have permission to read these certificates.

Mail Client

I am using Thunderbird as my mail client. For receiving mail I used SSL/TLS, while for sending mail I needed to set STARTTLS.

Security Measures

After completing the email setup, I immediately tested the server by sending test emails, only to find them being tossed straight into spam by gmail. It seems that gmail has a new feature that shows the security check status of an email (accessible via 'View Original'). These checks include SPF, DKIM and DMARC. My avatar showed up as an octagon with a question mark, indicating that the mail server was failing the basic SPF check. In order to avoid this, I took a bunch of security measures to tick all the boxes on email security test sites like intodns and mxtoolbox.

Sender Policy Framework (SPF)

An SPF TXT record documents the servers allowed to send emails on behalf of this address. In my case, where only the mail servers listed in the MX record are used, I simply put in:

v=spf1 mx -all

DomainKeys Identified Mail (DKIM)

I am using opendkim to sign emails and verify that they are indeed from my server. After installing the opendkim package, I followed the instructions in the Arch Wiki. First copy the example configuration file from /etc/opendkim/opendkim.conf.sample to /etc/opendkim/opendkim.conf and edit it (socket selection can be arbitrary):

Domain <domainname>

KeyFile /path/to/keys.private

Selector <myselector>

Socket inet:<dkimsocket>@localhost

UserID opendkim

Canonicalization relaxed/simple

Next, in the specified keyfile directory (the default is /var/db/dkim/), generate keys with:

$ opendkim-genkey -r -s <myselector> -d <domainname> --bits=2048

Along with the generated .private file is a .txt file with the necessary TXT record for DKIM. It basically publishes the public key for your mail server. Note that the TXT record may need to be broken down into several strings to comply with the 255 character limit. To check if the TXT record has been properly set up, I used (requires package dnsutils):

$ host -t TXT <myselector>._domainkey.<domainname>

The final step would be to start the opendkim service and make sure postfix performs the signing upon sending email. Edit /etc/postfix/main.cf to include:

non_smtpd_milters=inet:127.0.0.1:<dkimsocket>

smtpd_milters=inet:127.0.0.1:<dkimsocket>

After reloading postfix, DKIM should be in effect.

Domain-based Message Authentication, Reporting and Conformance (DMARC)

Unsurprisingly, there is a package, opendmarc, that implements DMARC, and there is also an Arch Wiki page for it. Do note that this requires SPF and DKIM to be set up first. After installation, I edited /etc/opendmarc/opendmarc.conf:

Socket inet:<dmarcsocket>@localhost

After starting the service, enable DMARC filter in postfix (separate with comma):

non_smtpd_milters=inet:127.0.0.1:<dkimsocket>, inet:127.0.0.1:<dmarcsocket>

smtpd_milters=inet:127.0.0.1:<dkimsocket>, inet:127.0.0.1:<dmarcsocket>

The final step is to add a DMARC TXT record in DNS settings as detailed on Arch Wiki page and reload postfix.

Ticking the Boxes

I tested my server by sending a test email to check-auth@verifier.port25.com and everything seems to be working. Not to mention that my email no longer gets classified as spam by gmail, and I can see my emails passing SPF, DKIM and DMARC checks in 'View Original'. I also get a detailed daily report from gmail thanks to DMARC. At this point, I am pretty comfortable about ditching all my previous gmail addresses and sticking to my own email. I am also looking into options for self-hosting calendars. Hopefully in the near future I can completely ditch Google for my essential communication needs.

No More Disqusting Disqus

A while back Disqus had a user info breach, which made me reconsider my choice of commenting system. If I am already hosting my own blog and email, why stop there and leave out commenting system to be served by a third-party platform?

The Good, The Bad, and The Ugly

I have mixed feelings about Disqus' idea of turning comments across different sites into a unified social network. Personally, I use most social media services as 'media' rather than as a social tool: they are obviously ill-suited for posting large paragraphs (thus the plethora of external links), and even for posting random thoughts, the sheer time it takes to type out a sensible and logically coherent argument (especially on mobile devices) frequently puts me off. Since I'm so used to being that creepy lurker, I inevitably got into the habit of judging my social media identity: what would I think about this Frankenstein's monster made up of retweets and likes?

Blog comments work a little differently. I feel more relaxed when commenting on a blog: it feels more like a conversation with the blog owner than broadcasting myself to everyone on the Internet. Disqus, however, takes this away by social network-ifying blog comments. I guess the potential upside to Disqus is attracting more traffic, but I do not want my blog comments to become just another social media live feed: if one has a valuable comment, the lack of Disqus should not deter him or her from posting it (while I've noticed the opposite happening quite a few times).

Here comes the ugly part though. Not to mention the fact that embedding JavaScript that I have no control over is a very bad idea, it was not until yesterday that I noticed viewer tracking in Disqus is an opt-out system. Since I don't plan on monetizing my blog, it really isn't worth risking blog viewers' privacy for what Disqus provides. Besides, it really worried me when I realized the majority of upvotes on my Disqus comments came from zombie accounts with profile links set to dating sites. Whether these 'disqusting' (bad pun alert) accounts were hijacked due to the security breach or were simply created by spammers is beyond me, but yeah, I don't want these zombies lurking around my blog's comments.

The Search for Replacements

I have decided to self-host a commenting system, and my top priority is to avoid any external service if possible. After careful selection, the two finalists for the job are isso and staticman. Isso is a lightweight comment server written in Python, while staticman is an interesting set of APIs that parses comments into text files and adds them to your site's GitHub repo. Installing isso means having to deal with databases, which I really dread and would like to avoid at all cost; using staticman allows the site to remain static, yet relies on GitHub's API (and staticman.net's API if I don't host an instance myself). While maintaining an entirely static site is very tempting, I decided to try out isso first to see if ditching all external sites is worth the effort.

Just for shits and giggles, here's another interesting alternative: Echochamber.js.

Setting Up Isso

The official website provides fairly good documentation already. I installed isso from AUR and enabled it via systemctl. Setting isso up was surprisingly painless (including the part with the database), and I used a different configuration from the default since I am running isso on the same server. The only issue I encountered was with smtp. By checking the status of postfix, I quickly determined that the problem lay in smtpd_helo_restrictions: after disabling the option reject_unknown_helo_hostname, isso can now use the local smtp server without issues. I took some extra effort to customize the CSS template for isso and the comment section looks fairly good now (a lot faster as well).

Happy Commenting!

2017 in Review

2017 will be over within the next few hours and I feel obliged to write something about it. Hopefully I won't have too many regrets by the time I finish this post.

What Have I Done

I have been working full time since June. Working life is surprisingly more relaxing than I expected. My daily schedule has seen a great increase in stability: no more 4-hour-sleeping-schedule is an obvious improvement and I can finally set aside time for my interests.

By the end of 2017, I have:

- Set up my new blog and wrote more stuff than 2015 and 2016 combined.

- Been using Emacs for 3 months.

- Been using my own email for 3 months.

- Found out I am pretty good at cooking.

- Been running nearly daily for 6 months. I finished my first half-marathon with a sub 2 hour time.

- Lost 20 pounds since June thanks to running.

Doesn't look too shabby huh? It's been a great year.

What's Next

I've gotten into the habit of listening to Dayo Wong's stand-up comedy from time to time, and for some reason one of his earlier shows, 跟住去边度 (What's Next), left the deepest impression on me. I now also pose this question to myself. Here's where I will be in 2018:

- Train for a full marathon and hopefully finish one.

- Write at least 20 blog articles.

- Get the first signature for my PGP key.

- Install Gentoo.

- Read more, watch less.

If I've learned anything from my past failed plans, it would be to always underestimate my own capabilities when planning, so I will just stop my list here and add additional goals as I march into 2018.

Happy New Year and hopefully 2018 will be another spectacular one!

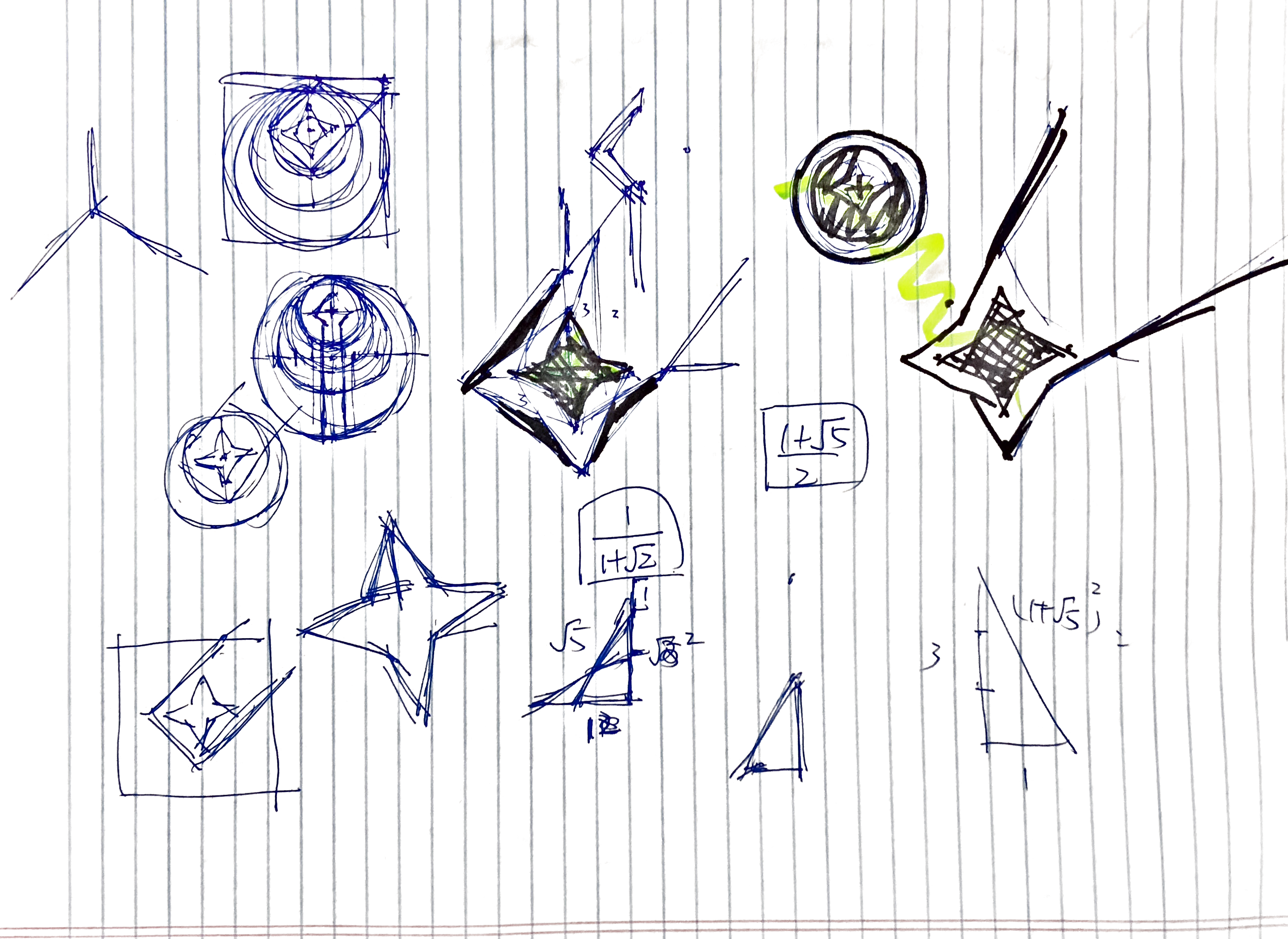

My Very Own Avatar Icon Thingy

I have been using the symbol for the Old Republic from Star Wars as my go-to profile picture for quite some time. In my attempt to maintain a consistent social network profile over multiple websites, I gradually came to realize that a profile picture is the most intuitive way to establish an identity (I guess this is also why services like Gravatar exist). In this case, using a picture from Wookieepedia that everyone has access to is probably not the brightest idea. Thus, I set out to create my very own avatar icon thingy.

Since I don't consider myself to have even the least amount of artistic talent, I started out spending quite some time in GeoGebra trying to reconstruct the Old Republic symbol in a systematic way. Filling up my screen with circles and measurements is fun and surprisingly addicting, yet after several hours, I only ended up with a confusing hodgepodge of curves.

It was obvious at that point that I wouldn't be able to recreate anything nearly as complicated as the Old Republic symbol, so I started stripping it down to a more abstract version.

I isolated out the "rising star" part of the original logo and discarded the wing-shaped portions to center the star. Instead of a rising star, I went for the impression of a shooting star and it turned out extremely well (in my opinion at least). With delight, I settled on the logo design a few minutes later. I kept the dark red color scheme (though I actually used #700000 instead of the original #710100 because I hate dangling ones) and added a gray background (#707070) as using white seemed too bright for me.

I also played around with several alternative color schemes, e.g. inverted versions. Maybe I will use these as icons for other projects. So far, I have updated all my actively used social network profiles and changed the favicon of this blog. Hopefully this icon will be unique enough for others to recognize me across different social networks.

Trying Out Mastodon

As mentioned in my previous post, I am not really accustomed to posting on social networks. However, the other day I encountered a term I hadn't heard in a long time: micro-blogging. Yes, quite a few social networks, Twitter for instance, are before anything else micro-blogging services. This definition of Twitter makes it immensely more appealing to me: it's a bite-sized blog for random thoughts, funny incidents, and many other pieces of my life that might not fit well in a regular blog post. However, I still find that posting on Twitter has the 'broadcasting to the entire Internet' feeling stamped into it. Guess I'll just host my own then.

Mastodon turned out to be one such solution. Mastodon's federated and decentralized nature makes it ideal for someone like me who struggles to balance building an online identity with minimizing the number of different companies I expose my information to. If people are willing to give away their personal information for fancy profile pictures, then hosting a Mastodon instance doesn't seem like such a bad deal.

Installation on Arch Linux

It was kinda surprising that there doesn't exist an Arch Linux specific installation guide for Mastodon. Not that the installation process would be more difficult on Arch Linux than Ubuntu, but installation can be made a lot simpler due to the abundance of packages. Since the official production guide is already fairly comprehensive, I'll just document some Arch Linux specific steps here.

Dependencies

Here's a table detailing all the dependencies and their corresponding packages in Arch Linux. There really is no need to git clone anything. npm was also required in the installation process, but was not listed in the official guide.

| Dependency | Package |
|---|---|
| node.js 6.x | nodejs-lts-boron |
| yarn | yarn |
| imagemagick | imagemagick |
| ffmpeg | ffmpeg |
| libprotobuf and protobuf-compiler | protobuf |
| nginx | nginx |
| redis | redis |
| postgresql | postgresql |
| nodejs | nodejs |
| rbenv | rbenv |
| ruby-build | ruby-build |
| npm | npm |

For rbenv, I needed to add eval "$(rbenv init -)" to .bashrc or .zshrc after installation, as prompted by the post-installation script.

Mastodon

Create user mastodon and add it to sudoers using visudo.

useradd -m -G wheel -s /bin/bash mastodon

Then I can clone the repository and start installing node.js and ruby dependencies. This is where npm is required. Besides, I encountered an ENOENT error when running yarn saying ./.config/yarn/global/.yarnclean is missing, which was resolved by creating the file.

PostgreSQL

In addition to installing the postgresql package, I followed Arch Wiki to initialize the data cluster:

$ sudo su postgres

[postgres]$ initdb --locale $LANG -E UTF8 -D '/var/lib/postgres/data'

After starting and enabling postgresql with systemctl, I can then start the psql shell as the postgres user and create user for Mastodon (use psql command \du to check the user is actually there):

$ sudo su postgres

[postgres]$ psql

[psql]# CREATE USER mastodon CREATEDB;

[psql]# \q

Port selection is customizable in postgresql.service and the port number will be used in the .env.production customization.

Redis

Pretty much the same drill as postgresql: I installed redis and started/enabled redis.service. The port selection and the addresses that have access can all be configured from /etc/redis.conf.

Nginx & Let's Encrypt

The official production guide covers this part pretty well already.

.env.production

The config file is fairly self-explanatory. The only thing I got wrong the first time is the variable DB_HOST for postgresql. I then obtained the correct path, /run/postgresql, by checking status of postgresql.service.

Scheduling Services & Cleanups

Again, just follow the official production guide. I installed cronie to schedule cron jobs.

My Experience

The web interface is fairly good. I like how I can write toots while browsing timelines instead of being forced to stay at the top of the page. I tried out quite a few Mastodon clients on my phone and settled on Pawoo, which is built by Pixiv. So far Mastodon feels like a more comfy twitter to me and a platform I am actually willing to write on. I'm pushing myself to write something on Mastodon every few days. So far it's been mostly running logs, but I'll come up with more stuff to post in the future.

One thing I would really like to see though is multilingual post support in Mastodon. A workaround I currently use is appending different tags to Chinese vs. English posts, which not only bloats my toots, but also fragments my timeline so that it's only 50% comprehensible for most people. Regrettably, it seems that out of the various micro-blogging/social networking services, only Facebook has something similar to this at the moment.

In the footer section, I've replaced Twitter with my Mastodon profile. Feel free to take a peek inside. :P